Python workflow orchestration: 4 open-source tools to know

See why workflow orchestration matters for your enterprise today, and get a rundown of four workflow orchestration tools that use Python.

Python plays a central role in modern data workflows, and for good reason. As teams build more advanced pipelines, automating individual tasks stops being enough: you need orchestration to coordinate dependent tasks and manage data flow across systems.

Workflow orchestration tools can help your team move beyond cobbled-together scripts and into reliable, repeatable systems. Whether you’re working in data science, DevOps or software development, a Python framework for orchestration gives you the flexibility and power to manage complex workflows at scale.

What is workflow orchestration?

Workflow orchestration is the practice of managing how tasks run across a system, especially when they depend on each other. It goes beyond running a single script or cron job. Orchestration tools connect all the pieces of a workflow: calling APIs, moving data, executing functions and handling failures when things go sideways.

Imagine a recipe. Automation is chopping one vegetable. Orchestration is making the full dish, so that everything is cooked in the right order for the right amount of time.

Similarly, automation runs one task, while orchestration connects many tasks together into a scalable process. A good orchestration system will support retries, notifications, error handling, real-time monitoring and task visualization.

Many Python-based tools let you create workflows using code, drag-and-drop builders or YAML templates.
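To make the distinction concrete, here is a minimal sketch using only Python’s standard library. Each task on its own is plain automation. The hypothetical `orchestrate` function adds the orchestration layer: it runs tasks in dependency order, catches a failure, sends a notification and skips everything downstream of the failed task. The task names and the `notify` callback (here just `print`) are illustrative assumptions. Real orchestrators add scheduling, persistence, monitoring and much more.

```python
from graphlib import TopologicalSorter
from typing import Callable


def orchestrate(
    tasks: dict[str, Callable[[], None]],
    upstream: dict[str, set[str]],
    notify: Callable[[str], None] = print,
) -> dict[str, str]:
    """Hypothetical mini-orchestrator: run tasks in dependency order, return final states."""
    # Every task is a node; its value is the set of tasks it must wait for.
    graph = {name: upstream.get(name, set()) for name in tasks}
    states: dict[str, str] = {}
    # static_order() yields each task only after all of its upstream tasks.
    for name in TopologicalSorter(graph).static_order():
        if any(states[dep] != "success" for dep in graph[name]):
            states[name] = "skipped"  # an upstream task failed or was skipped
            continue
        try:
            tasks[name]()
            states[name] = "success"
        except Exception as exc:  # error handling: record the failure and alert
            states[name] = "failed"
            notify(f"task {name!r} failed: {exc}")
    return states


# Individual automated tasks (placeholders for real work).
def extract() -> None:
    pass


def transform() -> None:
    raise ValueError("bad row in input")


def load() -> None:
    pass


def report() -> None:
    pass


states = orchestrate(
    {"extract": extract, "transform": transform, "load": load, "report": report},
    {"transform": {"extract"}, "load": {"transform"}, "report": {"extract"}},
)
# transform fails, so load is skipped; report still runs because it only needs extract.
print(states["load"], states["report"])  # skipped success
```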

The Python workflow framework: A go-to for automation

There’s a reason so many orchestration frameworks are built on Python. It’s flexible, widely used and packed with libraries that simplify workflow design. You can manage dependencies, trigger events and integrate with platforms like AWS, Kubernetes or Microsoft Azure without switching languages.

Thanks to Python’s open-source ecosystem, you can find orchestration tools for everything from data processing to API handling and machine learning (ML) operations. A quick pip install gives you access to task schedulers, plugins, templates and connectors.

Python’s readable syntax, along with the YAML configuration many of these tools accept, also makes workflows easier to write, share and maintain. And compared with heavier Java-based systems, Python options tend to be quicker to set up and faster to evolve, which is a big win for teams that need to move quickly.

How to choose the right orchestration tool

There’s no universally best tool, only the one that best fits your team’s use cases. Start with a few questions:

- Are you building ETL jobs?

- Training ML models?

- Running jobs across cloud and on-prem systems?

Your answers will help narrow it down.

If scalability is your top concern, look for platforms that support large-scale job orchestration and have good observability features. If you’re working with a non-developer audience, a low-code or drag-and-drop interface might get more team members involved. And if integrations are key, make sure the toolkit handles webhooks, APIs and third-party services.

Don’t forget to check for real-time alerts, retries and failure handling. Also, think about flexibility: Can you extend it with Python modules or plug in microservices?

Four Python workflow orchestration tools worth knowing

Apache Airflow

Airflow is one of the most popular open-source workflow systems out there. It uses directed acyclic graphs (DAGs) to define how tasks relate to each other and in what order they should run. It’s great for batch ETL workflows and works well as the backbone of larger workflow systems and complex data pipelines that span multiple environments, especially when paired with existing CI/CD pipelines.

What makes Airflow stand out is its extensibility. You can build your own operators, tap into plugins and interact with its REST API. The web UI lets you monitor flows, track real-time status and debug. Airflow is a solid choice if your workflows are large or require detailed control.
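Airflow’s own classes are not reproduced here. Instead, this standard-library sketch shows what the "directed acyclic" part of a DAG gives a scheduler. From each task’s upstream dependencies, Python’s `graphlib.TopologicalSorter` can release tasks in waves. Tasks in the same wave do not depend on one another and could run in parallel. The sorter also refuses any definition that contains a cycle. The `execution_waves` helper and the task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def execution_waves(upstream: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks within one wave have no dependencies on each other."""
    sorter = TopologicalSorter(upstream)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every upstream task of these is done
        waves.append(ready)
        sorter.done(*ready)  # pretend each task in this wave succeeded
    return waves


# Each task maps to the set of tasks it waits for (hypothetical pipeline).
pipeline = {
    "transform_orders": {"extract_orders"},
    "transform_users": {"extract_users"},
    "join": {"transform_orders", "transform_users"},
    "publish": {"join"},
}
print(execution_waves(pipeline))
# [['extract_orders', 'extract_users'], ['transform_orders', 'transform_users'], ['join'], ['publish']]

# A cyclic definition is rejected before anything runs.
try:
    execution_waves({"a": {"b"}, "b": {"a"}})
except CycleError as err:
    print("rejected cyclic definition:", err.args[1])  # args[1] holds the detected cycle
```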

Dagster

Dagster is a newer player in the orchestration space, built with a focus on data engineering. It offers a more structured way to define workflows. Instead of just chaining tasks, you model the flow of data through your pipeline. That structure makes testing and debugging much easier.

Its built-in visualization tools help you track jobs, troubleshoot errors and understand workflow history. It also supports event-driven workflows: sensors can trigger runs in response to changes in external systems.

It integrates with tools like Dask and AWS and handles partitioned runs and dynamic configuration. The visual interface is beginner-friendly, while its Python-based framework appeals to teams building complex data orchestration systems from scratch.
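You can sketch the difference between chaining tasks and modeling the flow of data without Dagster itself. In this plain-Python toy, each step declares the data it needs as function parameters. A hypothetical `materialize` helper then works out the run order and passes each result to the steps that consume it. Dagster’s `@asset` decorator similarly infers dependencies from parameter names, but the code below illustrates the idea and is not Dagster’s API. Because every step is an ordinary function, you can unit-test it by calling it with sample data. For example, `clean_orders([{"id": 9, "amount": 0.0}])` should return an empty list.

```python
import inspect
from graphlib import TopologicalSorter
from typing import Any, Callable


def raw_orders() -> list[dict]:
    # Hypothetical sample data standing in for a real source.
    return [{"id": 1, "amount": 30.0}, {"id": 2, "amount": -5.0}, {"id": 3, "amount": 12.5}]


def clean_orders(raw_orders: list[dict]) -> list[dict]:
    # Depends on raw_orders because of the parameter name; drops non-positive amounts.
    return [o for o in raw_orders if o["amount"] > 0]


def revenue(clean_orders: list[dict]) -> float:
    return sum(o["amount"] for o in clean_orders)


def materialize(steps: list[Callable[..., Any]]) -> dict[str, Any]:
    """Run each step once its inputs exist; dependencies come from parameter names."""
    by_name = {fn.__name__: fn for fn in steps}
    graph = {name: set(inspect.signature(fn).parameters) for name, fn in by_name.items()}
    results: dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        # Feed each step the outputs of the steps it names as inputs.
        results[name] = by_name[name](**{param: results[param] for param in graph[name]})
    return results


# The list order doesn't matter: the run order is derived from the data dependencies.
print(materialize([revenue, clean_orders, raw_orders])["revenue"])  # 42.5
```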

Luigi

Developed at Spotify, Luigi is a pure Python module that shines in batch processing and job dependencies. It’s lightweight but powerful. If you’ve ever had to ensure Task B doesn’t run until Task A is complete, Luigi is your friend.

Luigi plays well with big data tools like Hadoop, Hive and Spark. It’s a great fit for teams handling large datasets or working with traditional batch job flows. Its UI shows completed tasks, errors and what’s still running — like a living to-do list for your pipeline.
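Luigi’s core pattern works like this: a task lists what it requires, declares an output target and counts as complete once that output exists. Rerunning a pipeline therefore skips work that is already done. Real Luigi code subclasses `luigi.Task` and implements `requires()`, `output()` and `run()`. The stdlib-only toy below imitates that behavior with files in a temporary directory, so it runs without Luigi installed. The `FileTask` base class, its `build()` method and the two tasks are hypothetical.

```python
import tempfile
from pathlib import Path

WORKDIR = Path(tempfile.mkdtemp())
RUN_LOG: list[str] = []  # records which tasks actually executed


class FileTask:
    """Toy imitation of Luigi's pattern: a task is complete once its output file exists."""

    def requires(self) -> list["FileTask"]:
        return []

    def output(self) -> Path:
        return WORKDIR / f"{type(self).__name__}.txt"

    def run(self) -> None:
        raise NotImplementedError

    def build(self) -> None:
        if self.output().exists():
            return  # already complete, so skip it
        for dependency in self.requires():
            dependency.build()  # Task A must finish before Task B runs
        self.run()
        RUN_LOG.append(type(self).__name__)


class TaskA(FileTask):
    def run(self) -> None:
        self.output().write_text("raw data\n")


class TaskB(FileTask):
    def requires(self) -> list[FileTask]:
        return [TaskA()]

    def run(self) -> None:
        upstream = TaskA().output().read_text()
        self.output().write_text(upstream.upper())


TaskB().build()
TaskB().build()  # second call finds TaskB's output and does nothing
print(RUN_LOG)  # ['TaskA', 'TaskB']
```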

Prefect

Prefect was designed as a simpler, friendlier alternative to Airflow. It offers built-in retries, flexible failure handling and a clean Python API. You can run workflows on your own infrastructure or use Prefect Cloud, its managed service.

The dashboard gives teams visibility into task states, logs and runtime performance. Prefect is well-suited for data science and business users who want orchestration without the hassle of managing a heavy system. It also supports event-based triggers, real-time alerts and integrations with platforms like Slack.
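Prefect configures retries on the task itself, for example with the `retries` and `retry_delay_seconds` arguments to its `@task` decorator. The sketch below is not Prefect code. It is a small standard-library decorator, `with_retries` (a hypothetical name), that mimics the behavior those settings describe. It re-runs a failing function up to a fixed number of extra times, waits between attempts and re-raises the last error once attempts run out. The flaky `fetch_rates` call and its return value are made up for illustration.

```python
import functools
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(retries: int = 3, retry_delay_seconds: float = 1.0):
    """Hypothetical decorator: retry up to `retries` extra times, sleeping between attempts."""
    def decorator(fn: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> T:
            for attempt in range(retries + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception as exc:
                    if attempt == retries:
                        raise  # out of retries: surface the original error
                    print(f"{fn.__name__} failed ({exc}); retrying in {retry_delay_seconds}s")
                    time.sleep(retry_delay_seconds)
        return wrapper
    return decorator


calls = {"count": 0}


@with_retries(retries=2, retry_delay_seconds=0.1)
def fetch_rates() -> dict[str, float]:
    # Hypothetical flaky API call: fails twice, then succeeds.
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network error")
    return {"EUR": 0.92}


print(fetch_rates(), "after", calls["count"], "attempts")  # {'EUR': 0.92} after 3 attempts
```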

When Python isn’t enough: Choose effortless orchestration

Open-source tools offer speed and flexibility, but they can also bring challenges as complexity grows. Managing multiple frameworks, keeping everything in sync and scaling securely can be a pain.

That’s where ActiveBatch comes in. It’s a full-featured workflow orchestration platform designed for large, complex environments. With support for Java, APIs, cloud services and microservices, ActiveBatch lets you unify all your workflows, whether they live in code, YAML or drag-and-drop builders.

With ActiveBatch, you can:

- Trigger Python jobs based on real-time events

- Pass variables across workflows

- Monitor everything in one dashboard

- Handle governance and audit needs

It’s a single platform built for IT but user-friendly enough for Ops teams, data scientists and business analysts. Book a demo to see how ActiveBatch can simplify your orchestration strategy.

Python workflow framework and orchestration tools

Automation and orchestration may seem similar, but they serve distinct roles in IT and business operations. Automation handles individual tasks — like backing up a file or sending an email — without needing a person to step in. Orchestration, on the other hand, connects those tasks into a complete process. Think of automation as a single gear turning, while orchestration is the full machine working together. Orchestration manages timing, dependencies, errors and alerts, so everything runs smoothly from start to finish.

Modern workflow automation platforms also provide visual workflow builders, real-time monitoring and alerts (in Slack, for example) that help teams resolve bottlenecks quickly.

A workflow orchestration tool is a type of scheduler or platform that automates and manages sequences of tasks within a larger process. It’s essentially a choreographer, handling task dependencies, error handling, logging and alerting, so workflows run in the correct order and recover from issues when they arise.

Many support cloud-native execution, YAML configuration and drag-and-drop design to reduce the need for custom code. Modern orchestration tools also offer connectors for fast data integration and built-in support for task management across hybrid systems. Whether you’re scheduling ETL jobs or syncing cloud services, these tools help you scale without breaking things.

Orchestration in Python means using the language’s libraries, modules and frameworks to manage and automate sequences of tasks across systems or datasets. It’s popular because Python makes it easy to define what should happen, when and under what conditions. Libraries like Celery and Airflow let you define tasks, their dependencies and their triggers in Python code, and some tools also accept YAML-based workflow definitions. With support for APIs, real-time notifications and scalable execution, Python-based orchestration can power both simple jobs and complex workflow automation scenarios.

Extract, transform, load (ETL) tools and orchestration platforms both contribute to streamlined workflows, but they solve different problems. ETL tools are built for data: pulling it from one place, transforming it and loading it somewhere else. They’re great for that specific purpose.

Orchestration tools are more comprehensive. They manage the flow between ETL steps, API calls, approval processes and other automated tasks. They might run an ETL job, but they also handle other tasks around it, like sending alerts, kicking off follow-up steps or waiting for approvals.
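Here is a rough illustration of that division of labor. The toy below treats three small extract, transform and load functions as the ETL part. It wraps them in the concerns an orchestrator typically adds: an approval gate before loading, an alert on failure and a follow-up step on success. All function names are hypothetical. The `approve`, `alert` and `follow_up` callbacks stand in for whatever sign-off, notification channel or downstream job a real platform would use.

```python
from typing import Callable


# The ETL part: hypothetical extract, transform and load steps.
def extract() -> list[dict]:
    return [{"sku": "A1", "qty": "3"}, {"sku": "B2", "qty": "5"}]


def transform(rows: list[dict]) -> list[dict]:
    return [{**row, "qty": int(row["qty"])} for row in rows]


def load(rows: list[dict], warehouse: list[dict]) -> None:
    warehouse.extend(rows)


# The orchestration part: everything that happens around the ETL steps.
def orchestrated_etl(
    warehouse: list[dict],
    approve: Callable[[list[dict]], bool],
    alert: Callable[[str], None],
    follow_up: Callable[[int], None],
) -> str:
    try:
        rows = transform(extract())
        if not approve(rows):  # approval gate before anything is written
            alert("load rejected at approval step")
            return "rejected"
        load(rows, warehouse)
    except Exception as exc:
        alert(f"ETL failed: {exc}")
        return "failed"
    follow_up(len(rows))  # e.g., kick off a report refresh
    return "loaded"


warehouse: list[dict] = []
status = orchestrated_etl(
    warehouse,
    approve=lambda rows: all(r["qty"] > 0 for r in rows),
    alert=lambda msg: print("ALERT:", msg),
    follow_up=lambda n: print(f"follow-up: {n} rows ready for reporting"),
)
print(status)  # loaded
```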

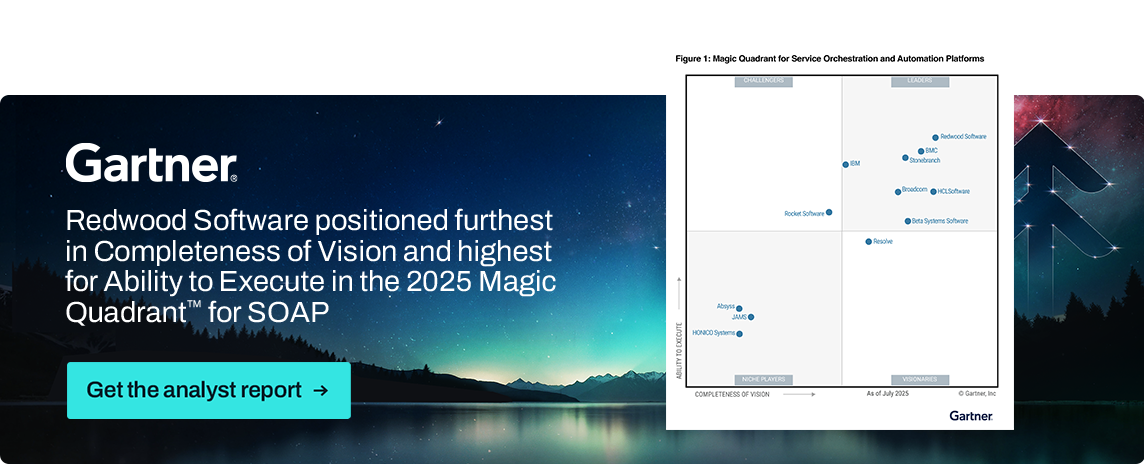

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally, and MAGIC QUADRANT is a registered trademark of Gartner, Inc. and/or its affiliates and are used herein with permission. All rights reserved.

These graphics were published by Gartner, Inc. as part of a larger research document and should be evaluated in the context of the entire document. The Gartner document is available upon request from https://www.advsyscon.com/en-us/resource/gartner-soaps-mq.